2018-07-16 13:00:21 +08:00

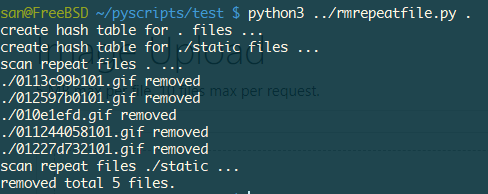

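I've collected a lot of anime (二次元) pictures, so some duplicates are inevitable. I wrote a small script to delete them: it hashes every file with MD5 and keeps one copy of each.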

#!/usr/bin/env python
# -*- coding:utf-8 -*-

import sqlite3
import hashlib
import os
import sys

def md5sum(file):
    # Hash the file in 64 KiB blocks so large images are never loaded whole.
    md5_hash = hashlib.md5()
    with open(file, "rb") as f:
        for byte_block in iter(lambda: f.read(65536), b""):
            md5_hash.update(byte_block)
    return md5_hash.hexdigest()

def create_hash_table():
    # Start from a fresh database on every run.
    if os.path.isfile('filehash.db'):
        os.unlink('filehash.db')
    conn = sqlite3.connect('filehash.db')
    c = conn.cursor()
    c.execute('''CREATE TABLE FILEHASH
        (ID INTEGER PRIMARY KEY AUTOINCREMENT,
        FILE TEXT NOT NULL,
        HASH TEXT NOT NULL);''')
    conn.commit()
    c.close()
    conn.close()

def insert_hash_table(file):
    # Record one (path, md5) row for the given file.
    conn = sqlite3.connect('filehash.db')
    c = conn.cursor()
    md5 = md5sum(file)
    c.execute("INSERT INTO FILEHASH (FILE,HASH) VALUES (?,?);", (file, md5))
    conn.commit()
    c.close()
    conn.close()

def scan_files(dir_path):
    # First pass: hash every file under dir_path into the table.
    for root, dirs, files in os.walk(dir_path):
        print('create hash table for {} files ...'.format(root))
        for file in files:
            filename = os.path.join(root, file)
            insert_hash_table(filename)

def del_repeat_file(dir_path):
    # Second pass: delete a file whenever another row still has the same hash.
    conn = sqlite3.connect('filehash.db')
    c = conn.cursor()
    removed = 0  # initialised once so the final count covers the whole walk
    for root, dirs, files in os.walk(dir_path):
        print('scan repeat files {} ...'.format(root))
        for file in files:
            filename = os.path.join(root, file)
            md5 = md5sum(filename)
            c.execute('select * from FILEHASH where HASH=?;', (md5,))
            total = c.fetchall()
            if len(total) >= 2:
                os.unlink(filename)
                removed += 1
                print('{} removed'.format(filename))
                # Drop this row so the last remaining copy is kept.
                c.execute('delete from FILEHASH where HASH=? and FILE=?;', (md5, filename))
                conn.commit()
    conn.close()
    print('removed total {} files.'.format(removed))

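def main():
    # The directory to clean is the last command-line argument.
    if len(sys.argv) < 2:
        sys.exit('usage: {} DIR'.format(sys.argv[0]))
    dir_path = sys.argv[-1]
    create_hash_table()
    scan_files(dir_path)
    del_repeat_file(dir_path)

if __name__ == '__main__':
    main()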
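
For comparison, here is a rough sketch of the same idea without SQLite: it groups paths by MD5 digest in a dict, so each file is hashed only once. This is not the script above, just one possible variant. The names `find_duplicates`, `remove_duplicates`, `block_size` and the `dry_run` flag are my own assumptions, and "keep the first path in sorted order" is an arbitrary choice.

```python
import hashlib
import os
from collections import defaultdict


def md5sum(path, block_size=65536):
    """Return the hex MD5 digest of the file at `path`, read in blocks."""
    md5_hash = hashlib.md5()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(block_size), b""):
            md5_hash.update(block)
    return md5_hash.hexdigest()


def find_duplicates(dir_path):
    """Map each digest to the sorted paths sharing it (groups of 2+ only)."""
    by_hash = defaultdict(list)
    for root, _dirs, files in os.walk(dir_path):
        for name in files:
            path = os.path.join(root, name)
            by_hash[md5sum(path)].append(path)
    return {h: sorted(paths) for h, paths in by_hash.items() if len(paths) > 1}


def remove_duplicates(dir_path, dry_run=True):
    """Keep the first path of each group; delete (or only list) the rest."""
    removed = []
    for paths in find_duplicates(dir_path).values():
        for path in paths[1:]:
            if not dry_run:
                os.unlink(path)  # hypothetical policy: first sorted path survives
            removed.append(path)
    return removed
```

Calling `remove_duplicates(some_dir)` with the default `dry_run=True` only returns the paths that would be deleted, which is a cheap way to check the list before passing `dry_run=False`.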
